Overview of workflows and data approaches in bioproduction – an industry perspective

Posted: 28 August 2020 | Melanie Diefenbacher (Genedata)

Downstream processing is an integral part of the production process of biopharmaceuticals and contributes significantly to overall productivity and product quality, as well as to processing costs. Melanie Diefenbacher of Genedata provides a comprehensive overview of downstream processes, highlighting several challenges and the importance of investing in automation and digitalisation to ensure efficient processing.

Why are biopharmaceuticals so important in pharma?

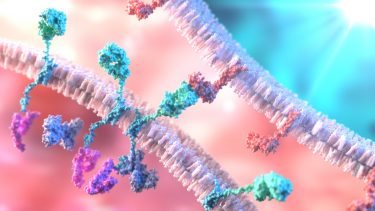

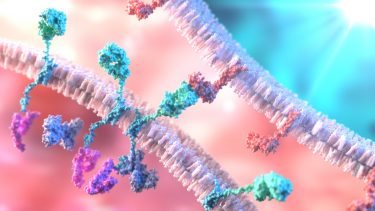

Biopharmaceuticals have many advantages compared to traditional small-molecule drugs. They offer avenues to treat specific diseases and patient groups in a highly tailored manner, which means that they can address previously untreatable conditions while also offering greater potency and specificity and, consequently, fewer side effects.

Existing biopharmaceutical formats are continuously evolving and becoming increasingly sophisticated. Today, they comprise classical therapeutic monoclonal antibodies (mAbs), multi-specific antibodies (which simultaneously bind to more than one target structure) and alternative scaffolds for molecular recognition. Additionally, continued research is providing entirely new formats, such as those applied in cell and gene therapy. However, biopharmaceuticals present operational and technological challenges. Producing these large and complex molecules reliably at an industrial scale requires manufacturing capabilities of a previously unknown sophistication. In addition, long process durations, low yields, costly raw materials and the need for highly skilled operating staff make production facilities very costly to run. Despite these challenges, the annual growth rate for biopharmaceuticals is double that of conventional small-molecule drugs, and this trend is expected to continue.

Clearly, biopharmaceuticals represent an important part of pharmaceutical companies’ drug portfolios. To be successful, these companies need to invest in automation and digitalisation of biopharma R&D processes, both to discover new biopharmaceuticals efficiently and to develop the resulting manufacturing processes.

Can you provide an overview of workflows in bioproduction?

The technology roadmap created by the BioPhorum Operations Group provides a comprehensive overview not only of the facility types and core processes traditionally used by the biopharma industry, but also of concepts that are currently being established.

The BioPhorum Operations Group distinguishes between five facility and associated product types:

- Traditional large-scale facilities with stainless-steel bioreactors of more than 10,000L volume, where upstream processing is done in fed-batch mode and downstream processing in batch mode, providing relatively high cell culture expression titres (∼3g/L) and purification recoveries. This type of facility is typically used to produce less complex proteins such as mAbs, mAb fusions and other recombinant proteins with low degrees of glycosylation.

- Large- to intermediate-scale facilities with single-use bioreactors of up to 2,000L volume, where upstream processing is done in perfusion mode and downstream processing in batch mode. These facilities are used to produce mAbs and/or non-mAb products, which are more complex proteins such as therapeutic enzymes.

- Intermediate-scale and/or multiproduct facilities using single-use technology and running both upstream and downstream processing in batch mode. This type of facility typically produces more complex proteins such as bispecifics, multicomponent biologics or conjugates.

- Small-scale portable facilities using single-use technology where upstream processing is conducted either in batch or continuous mode and downstream processing is in continuous mode. These facilities are used to produce potentially highly complex biologics such as vaccines or allogeneic cell therapeutics.

- Parallel processing facilities for personalised medicine, where isolated, patient-specific preparation of therapeutic proteins or biological therapies (eg, potentially highly complex biologics such as autologous vaccines [ie, vaccines derived from the individual patient] or gene or cell therapeutics) is typically carried out using manual cell culture or cell preparation, with downstream processing in batch mode.

Why is downstream processing such a vital part in the manufacture of biopharmaceuticals?

What are the main challenges with this process and how are companies adapting/changing to address these? What trends do you expect to see in this area over the next five years?

Like upstream processing, downstream processing consists of a series of unit operations, including various filtration and chromatography steps. Across these steps, several factors, often related to limited capacity, challenge productivity and product quality. Over the past decades, upstream productivity has greatly increased thanks to process intensification and improvements in cell engineering and media composition, so downstream operations must now handle much larger product quantities. Resin chromatography columns, for example, have slow mass transfer rates and were therefore oversized to accommodate the higher flow rates needed to increase productivity. Such an oversizing strategy is costly and inflexible. To decrease costs and increase productivity and flexibility, the industry is moving towards continuous processing, which in turn creates other downstream processing challenges. Although many technologies addressing these challenges are already available, their implementation in biopharmaceutical production is still limited, especially at large scale.
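
Why slow mass transfer leads to oversized columns can be shown with the residence-time relation for a packed bed: residence time equals bed volume divided by volumetric flow rate. If the resin needs a minimum residence time to bind product, any increase in flow rate has to be matched by a proportionally larger column. The following Python sketch assumes that simple relation and uses hypothetical numbers, not process data.

```python
def required_bed_volume_l(flow_rate_l_per_min: float, residence_time_min: float) -> float:
    """Packed-bed volume (L) that gives the feed at least the required residence
    time: residence time = bed volume / volumetric flow rate, so V = Q * t_res."""
    if flow_rate_l_per_min <= 0 or residence_time_min <= 0:
        raise ValueError("flow rate and residence time must be positive")
    return flow_rate_l_per_min * residence_time_min


# Hypothetical numbers: the residence time is fixed by the resin's slow mass
# transfer, so doubling the flow rate doubles the bed volume required.
base = required_bed_volume_l(flow_rate_l_per_min=10.0, residence_time_min=4.0)
doubled = required_bed_volume_l(flow_rate_l_per_min=20.0, residence_time_min=4.0)
print(base, doubled)  # 40.0 80.0
```

Because the required volume scales linearly with flow rate, raising throughput on resin columns quickly means buying and packing much more resin, which is the cost and inflexibility described above.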

Potential solutions for addressing the capacity discrepancy between upstream and downstream processing could include a shift towards alternative harvesting methods, such as flocculation, or the use of continuous capture via multi-column periodic countercurrent processes. It has also been shown that precipitation/flocculation can yield purities similar to those of affinity chromatography using the classical capture agent, Protein A. Furthermore, multimodal chromatography, in which a hydrophobic moiety is incorporated into the ligand structure used for ion exchange chromatography, is superior to classical polishing methods because it offers simultaneous clearance of high molecular weight species, host cell proteins and nucleic acids. Finally, membrane chromatography units (single- or limited-use) provide a cost-effective and highly productive alternative to resin-based chromatography.
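
The multi-column periodic countercurrent idea can be sketched as a rotation of columns through loading and recovery phases. In this simplified three-column model (an assumption for illustration, not a description of any commercial system), the column being loaded passes its breakthrough to a secondary column; once the primary column is saturated it is washed, eluted and regenerated while the secondary column takes over as primary, so feed loading never stops.

```python
from typing import List

# Simplified, hypothetical phase labels. Real periodic countercurrent (PCC)
# schedules split recovery into wash, elution, cleaning and re-equilibration
# sub-steps with their own durations.
PHASES = [
    "load (secondary, catches breakthrough)",
    "load (primary)",
    "wash/elute/regenerate",
]


def pcc_schedule(n_steps: int) -> List[List[str]]:
    """Phase of each of three columns at every switching step.

    Each column advances secondary -> primary -> recovery -> secondary, and
    the columns are staggered by one phase, so at every step exactly one
    column occupies each phase and one is always receiving fresh feed.
    """
    n_columns = len(PHASES)
    return [
        [PHASES[(step + col) % n_columns] for col in range(n_columns)]
        for step in range(n_steps)
    ]


for step, phases in enumerate(pcc_schedule(3)):
    print(f"step {step}: " + " | ".join(f"C{c + 1}: {p}" for c, p in enumerate(phases)))
```

Each printed row is one switching interval; following a single column down the rows shows its rotation. In practice, switching may be triggered by measured breakthrough rather than by a fixed step count.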

On the other hand, with the rise of personalised medicine and smaller biopharmaceutical product campaigns, the need for flexible, scalable, multi-product facilities is increasing. In these cases, limited capacity is less of a problem. The biopharmaceutical industry faces the challenge of adjusting to this paradigm shift in demand. Production in flexible, smaller-volume facilities, together with the pressure to lower costs driven by the rise of biosimilars, will lead to increased use of single-use technologies and systems to achieve higher flexibility and lower costs.

Can you talk a bit about the data produced in the process and how this has changed over the last few years?

The final drug substance resulting from downstream processing must be of high purity and meet defined quality specifications for patient safety reasons. The yield also needs to be as high as possible for economic reasons. Today, these goals are typically achieved by ‘quality by design’ (QbD) approaches, which have a great impact on data management in downstream processing, as well as in other bioprocessing areas. The QbD philosophy means that product quality is ensured by process design and control rather than by constant testing at interim steps and at the end. This approach requires the definition of process design spaces, elaborate process controls and in silico process modelling. As a result, more and more experiments are being performed during manufacturing process development. In particular, miniaturisation and scale-down models, eg, for filtration and chromatography steps, allow a much higher degree of automation and parallelisation, which in turn generates huge data volumes that need to be captured, processed and interpreted to identify the optimal process for a given product. The data need to be collected in a highly structured way, which facilitates the application of statistical and predictive methods for the design of optimal processes. Process parameters typically collected for downstream unit operations include flow rates, pressures, volumes, buffer conductivity and pH.
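
Structured data collection and a design space can be illustrated together: each run is stored as a typed record of the process parameters listed above, and a check flags any parameter that falls outside its acceptable range. The record fields, units and ranges below are hypothetical, and a real QbD design space is usually multidimensional, capturing interactions between parameters, rather than the independent per-parameter ranges used here for simplicity.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ChromatographyRun:
    """One structured record per run; field names and units are hypothetical."""
    run_id: str
    flow_rate_cm_per_h: float
    pressure_bar: float
    load_volume_ml: float
    buffer_conductivity_ms_per_cm: float
    ph: float


# Hypothetical design space: (low, high) acceptable range for each parameter.
DESIGN_SPACE: Dict[str, Tuple[float, float]] = {
    "flow_rate_cm_per_h": (100.0, 300.0),
    "pressure_bar": (0.0, 3.0),
    "load_volume_ml": (50.0, 200.0),
    "buffer_conductivity_ms_per_cm": (5.0, 15.0),
    "ph": (6.8, 7.6),
}


def out_of_range(run: ChromatographyRun) -> List[str]:
    """Names of the run's parameters that fall outside DESIGN_SPACE."""
    return [
        name
        for name, (low, high) in DESIGN_SPACE.items()
        if not low <= getattr(run, name) <= high
    ]


run = ChromatographyRun("R-001", 250.0, 2.1, 120.0, 16.2, 7.2)
print(out_of_range(run))  # ['buffer_conductivity_ms_per_cm']
```

Keeping every run in the same typed structure is what makes such checks, and the statistical modelling mentioned above, straightforward to apply across hundreds of parallel scale-down experiments.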

Modern process analytical technology (PAT) tools allow the concentration or composition of the biopharmaceutical to be measured on-line or at-line during a chromatography run (eg, via directly connected high-performance liquid chromatography and spectroscopy methods). The resulting information enables more granular process monitoring. Finally, product quality is tested by drawing samples during and at the end of the downstream process.

Product quality data typically comprise analytical chromatography results, mass spectrometry data and outcomes of biophysical methods (eg, dynamic light scattering and differential scanning calorimetry for assessment of molecular integrity and stability). Besides the technological improvements in all these methods, it is notable that mass spectrometry combined with analytical liquid chromatography (LC-MS) is becoming an increasingly important analytical method, since this technology can measure many critical product quality attributes simultaneously (product integrity, heterogeneity, post-translational modifications such as glycosylation and deamidation, etc.). Development groups such as those at Amgen call this LC-MS approach the multi-attribute method (MAM); it reduces the number of assays used for process development and in-process control. Product quality assessment additionally includes data from functional assays, such as binding characterisation using ELISA, surface plasmon resonance (SPR) or biolayer interferometry (BLI), and cellular signal induction or proliferation inhibition tests.

All in all, large amounts of data are produced during process development using many scale-down models, which emulate manufacturing conditions more realistically and allow more process variants to be tested, compared and analysed. Such broad data sets open up avenues for developing manufacturing processes systematically. In addition, scientists and engineers recognise the value of a holistic view that takes data from cell line and upstream process development into account when evaluating downstream processes.
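
Taking upstream data into account when evaluating downstream results requires records from different development stages to share a common key. A minimal sketch, assuming a hypothetical batch identifier that is recorded at every stage; the record types, field names and values are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class UpstreamResult:
    batch_id: str        # hypothetical shared key across development stages
    cell_line: str
    titre_g_per_l: float


@dataclass(frozen=True)
class DownstreamResult:
    batch_id: str
    step_yield_pct: float
    hcp_ppm: float       # residual host cell protein


def link_by_batch(
    upstream: List[UpstreamResult], downstream: List[DownstreamResult]
) -> List[Tuple[UpstreamResult, DownstreamResult]]:
    """Pair each downstream result with the upstream batch it came from.

    Downstream records whose batch_id has no upstream match are skipped.
    """
    by_id: Dict[str, UpstreamResult] = {u.batch_id: u for u in upstream}
    return [(by_id[d.batch_id], d) for d in downstream if d.batch_id in by_id]


upstream = [UpstreamResult("B1", "CL-A", 3.1), UpstreamResult("B2", "CL-B", 4.5)]
downstream = [DownstreamResult("B2", 82.0, 150.0), DownstreamResult("B3", 90.0, 40.0)]
for up, down in link_by_batch(upstream, downstream):
    print(up.cell_line, up.titre_g_per_l, down.step_yield_pct)  # CL-B 4.5 82.0
```

The unmatched record (B3) shows why a consistent identifier matters: without it, downstream results cannot be traced back to the cell line and upstream conditions that produced them.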

Finally, the increasing number of specialised therapeutic modalities makes downstream process development, as well as overall process development, more complex. These modalities include highly engineered molecules such as bispecific antibodies, fusion proteins, novel scaffolds and other novel molecule types. In addition, novel cellular therapeutics in immuno-oncology, such as TCR T and CAR T cells, pose their own process development challenges.

Can you explain the difference between continuous and batch methods? What are the pros and cons to both?

In general, a batch process run can be divided into a fixed set of phases that result in one drug substance batch. For example, during a classical Protein A capture step in batch mode, a column is equilibrated, loaded with product up to its loading capacity, then washed, eluted, cleaned, regenerated and stored until the next use. The eluted product can then be applied to the next downstream processing step.
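
The fixed phase sequence of a batch capture step also determines how many cycles a column must run: each cycle can take up at most the column volume multiplied by the resin's loading capacity. A minimal sketch, assuming a single column reused for repeated cycles; the harvest volume, titre, column size and loading capacity in the example are hypothetical.

```python
import math
from enum import Enum


class Phase(Enum):
    """Phases of one batch Protein A capture cycle, in the order given in the text.

    In a multi-cycle campaign, storage typically follows only the last cycle.
    """
    EQUILIBRATE = 1
    LOAD = 2
    WASH = 3
    ELUTE = 4
    CLEAN = 5
    REGENERATE = 6
    STORE = 7


def cycles_needed(product_mass_g: float, column_volume_l: float,
                  loading_capacity_g_per_l: float) -> int:
    """Number of load/elute cycles needed to capture product_mass_g when each
    cycle loads the column up to loading_capacity_g_per_l."""
    per_cycle_g = column_volume_l * loading_capacity_g_per_l
    if per_cycle_g <= 0:
        raise ValueError("column volume and loading capacity must be positive")
    return math.ceil(product_mass_g / per_cycle_g)


print(" -> ".join(phase.name.lower() for phase in Phase))
# Hypothetical: 2,000 L harvest at 3 g/L = 6,000 g; 100 L column at 40 g/L = 4,000 g per cycle
print(cycles_needed(product_mass_g=2000 * 3.0, column_volume_l=100.0,
                    loading_capacity_g_per_l=40.0))  # 2
```

Rounding up matters: a load of 1.5 column capacities still needs two full cycles, each repeating the equilibration-to-regeneration sequence.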

There are clear advantages to using continuous procedures in downstream processing, which require a high degree of automation. Continuous processes could, for example, help alleviate bottlenecks stemming from increased upstream titres, aid in the production of unstable molecules, support the establishment of standardised processes in mid- to small-scale facilities, shorten development timelines, reduce the risk of human error and, ultimately, reduce costs and increase productivity.

However, batch processes also offer advantages. The initial set-up cost, for example, is lower than for continuous processes. In addition, batch processes can more easily be adapted to products with special requirements.

How are downstream bioprocesses adapting, and how will they adapt, to CAR T and gene therapies?

The most common gene therapy products currently in development are based on adeno-associated viruses (AAVs). AAV particles are relatively small (20–25nm in diameter) and stable. Common filtration and chromatography methods can be applied while keeping adventitious agents out. One challenge is that AAV particles need to be concentrated more than 100-fold while maintaining high purity in order to reach drug substance volumes suitable for administration to patients. In addition, it is hard to distinguish full particles from empty capsids (capsids that do not contain vector DNA) in downstream processing.
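
The >100-fold concentration requirement follows simple mass-balance arithmetic: the concentration factor (CF) is the feed volume divided by the final volume, and the titre rises by the same factor, scaled down by any product loss. A minimal sketch, assuming titres in vector genomes per millilitre (vg/mL) and a recovery fraction you supply; the feed volume in the example is hypothetical.

```python
def volume_after_concentration(feed_volume_l: float, concentration_factor: float) -> float:
    """Final volume when concentration factor CF = feed volume / final volume."""
    if concentration_factor <= 0:
        raise ValueError("concentration factor must be positive")
    return feed_volume_l / concentration_factor


def titre_after_concentration(titre_vg_per_ml: float, concentration_factor: float,
                              recovery: float = 1.0) -> float:
    """Titre after concentration; recovery < 1 models product loss (1.0 = lossless)."""
    return titre_vg_per_ml * concentration_factor * recovery


# Hypothetical feed: 200 L concentrated 100-fold leaves 2 L.
print(volume_after_concentration(200.0, 100.0))  # 2.0
```

The recovery term is why the purity and yield requirements pull against each other: every loss during the concentration steps lowers the final titre by the same proportion.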

Downstream processes for cellular therapeutics are very different from those for protein and viral products. For instance, CAR T cells are among the most prominent cellular therapeutics today. After growth in bioreactors, the cells need to be harvested by detachment from surfaces and concentrated using cell washers prior to cryopreservation.

About the author

Melanie Diefenbacher is a Scientific Consultant, responsible for deployment, implementation and ongoing support of Genedata’s biopharma platforms at major biopharmaceutical companies. Previously, she managed a cell culture team at Biogen’s biologics manufacturing facility in Switzerland, where she ensured operational readiness for the opening of the new site. Prior to that, she built up and headed the Fermentation Technology Platform at Evolva. Melanie was educated at the University of Hohenheim and the Max Planck Institute in Tübingen and has a PhD in Biochemistry.
