From quantum to clinic: AI reshapes clinical trials

Posted: 3 February 2026 | Dr Mark Lambrecht (SAS)

The burden of conducting clinical trials is a significant hurdle in drug discovery. Here, Dr Mark Lambrecht reveals the technological developments that are driving shifts in this space.

For life sciences leaders looking ahead to 2026, the future is coming into focus. Quantum-enhanced toxicology models, regulatory sandboxes built on synthetic clinical data, AI-driven personalised enrolment and fully decentralised trials are all moving from concept to reality.

Yet behind these headline trends sits a quieter shift. Artificial intelligence (AI) is steadily changing how clinical trials are designed, run and regulated.

That shift is already visible in the structure of today’s studies. The modern clinical trial looks very different from the paper-heavy, site-centric model of the past. Decentralised and adaptive designs are becoming mainstream, real-world evidence is gaining regulatory acceptance and wearable devices are streaming patient data in near-real time. Alongside this, organisations are blending machine learning with traditional statistical programming to generate insight earlier in development.

The promise is compelling: faster studies, stronger recruitment and more reliable evidence. But it also exposes a hard truth: without the right analytical foundations, AI introduces complexity rather than clarity.

The pressure on trial infrastructure

Regulatory scrutiny has never been greater. Frameworks such as FDA 21 CFR Part 11 and EU Annex 11 impose strict controls on the systems used in trials, while national requirements dictate when and how results must be submitted. As of January 2025, the EU mandates a joint clinical assessment for new medicines, which takes account of a more comprehensive evidence package, including real-world observational data in addition to evidence from randomised clinical trials.

Layer AI onto this and expectations rise further. Models that guide patient selection or flag safety risks must be transparent, version-controlled and auditable. When regulators ask how a conclusion was reached, “the model said so” is not enough.
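To make "transparent, version-controlled and auditable" more concrete, the following is a minimal sketch of an audit record attached to a single model output. It is illustrative only: the `AuditRecord` fields, the `dropout_risk` model name and the version string are hypothetical, and a real GxP system would add access control, electronic signatures and tamper-evident storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditRecord:
    """One immutable log entry per model output (hypothetical schema)."""
    model_name: str
    model_version: str
    input_hash: str
    output: str
    timestamp: str


def fingerprint(payload: dict) -> str:
    """Return a stable SHA-256 hash of a JSON-serialisable payload."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_prediction(model_name, model_version, inputs, output, timestamp):
    """Bundle what a reviewer would need to reproduce a conclusion."""
    return AuditRecord(model_name, model_version, fingerprint(inputs), output, timestamp)


record = record_prediction(
    model_name="dropout_risk",   # hypothetical model name
    model_version="1.4.2",       # hypothetical version tag
    inputs={"subject_id": "S-001", "missed_visits": 2},
    output="high",
    timestamp="2026-02-03T09:00:00Z",
)
print(json.dumps(asdict(record), indent=2))
```

Storing a hash of the inputs rather than the inputs themselves is one possible design choice: it lets an auditor confirm which data a prediction was based on without copying patient-level values into the log.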

From trial design to personalised enrolment

Against that backdrop, AI is beginning to reshape clinical development in practical ways.

In trial design, both traditional inferential statistics and machine learning are being used to analyse historical studies and real-world datasets to refine inclusion criteria and endpoints. Increasingly, the same techniques are applied to patient-level data, combining genomics, clinical history and treatment patterns to identify suitable trial participants. This brings the industry closer to genuinely personalised enrolment, improving efficiency while giving patients a better chance of being matched to the right study.

During execution, AI models monitor operational data to predict site performance, flag deviations and anticipate dropout risk. Decentralised trials rely on integrating electronic medical records, genomics and wearable data, creating a multimodal environment in which AI can thrive, provided the underlying data infrastructure is sound.

After the trial, analytics and agentic AI approaches operating under human oversight are streamlining regulatory reporting by automating tables, listings and figures, while ensuring every output can be traced back to source data.
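As an illustration of what tracing an output back to source data can mean in practice, here is a small sketch in which every cell of a summary table keeps the identifiers of the records that produced it. The adverse-event rows and the `row_id` values are invented for the example, and the approach is an assumption about one way to carry lineage, not a description of any particular reporting tool.

```python
from collections import defaultdict

# Hypothetical adverse-event records; a real study would read these
# from a controlled analysis dataset.
source_rows = [
    {"row_id": "AE-0001", "arm": "Treatment", "event": "Headache"},
    {"row_id": "AE-0002", "arm": "Treatment", "event": "Nausea"},
    {"row_id": "AE-0003", "arm": "Placebo", "event": "Headache"},
    {"row_id": "AE-0004", "arm": "Treatment", "event": "Headache"},
]


def summarise_with_lineage(rows):
    """Count events per (arm, event) and keep the contributing row ids."""
    cells = defaultdict(list)
    for row in rows:
        cells[(row["arm"], row["event"])].append(row["row_id"])
    return {
        key: {"count": len(ids), "source_rows": ids}
        for key, ids in sorted(cells.items())
    }


table = summarise_with_lineage(source_rows)
for (arm, event), cell in table.items():
    print(f"{arm:<10} {event:<10} n={cell['count']}  from {', '.join(cell['source_rows'])}")
```

Because each count carries its own list of source rows, a reviewer can check any figure in the table against the underlying records without re-running the analysis.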

Quantum AI enters the clinic

Beyond today’s machine learning models, a more radical shift is approaching. In 2026, quantum machine learning is expected to move from academic research into practice, with quantum-enhanced insights integrated into the preclinical and Phase I safety assessment pipeline, particularly in predictive toxicology.

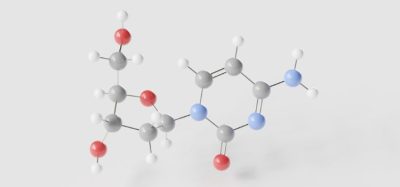

Classical AI struggles to simulate the molecular complexity that determines whether a compound will be safe in humans. Quantum approaches, meanwhile, promise to model these interactions more accurately, allowing teams to flag toxicity risks earlier in discovery and preclinical phases. Given that late-stage safety failures remain one of the most expensive causes of attrition, even modest improvements here could have a profound impact on development timelines. Moreover, they could lead to capital preservation, a better internal rate of return and investment in other viable candidates.

While quantum techniques point to what may soon be possible, most organisations still need safe, controlled ways to test today’s AI models in real-world trial environments. As AI techniques become more sophisticated, regulatory approaches are evolving in parallel. Regulator-approved sandboxes built on synthetic clinical data are emerging as safe environments in which organisations can test models, simulate trials and prototype decision-support tools without breaching privacy laws or healthcare regulations.

These sandboxes offer a bridge between innovation and compliance. They allow teams to pressure-test new approaches before live deployment, accelerating validation while containing risk.
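For readers unfamiliar with the idea, the sketch below shows the simplest possible form of synthetic data: records drawn from a seeded random generator, so that no value comes from a real patient and the same cohort can be regenerated on demand. The field names and value ranges are assumptions chosen for illustration. Synthetic data used in an actual sandbox would need to preserve the statistical structure of real trials and be assessed for privacy risk, which independent random draws like these do not do.

```python
import random


def synthetic_cohort(n, seed=0):
    """Generate n made-up subject records from a seeded generator."""
    rng = random.Random(seed)  # local generator keeps runs reproducible
    cohort = []
    for i in range(n):
        cohort.append({
            "subject_id": f"SYN-{i:04d}",                     # clearly marked as synthetic
            "age": rng.randint(18, 85),                       # assumed eligibility range
            "arm": rng.choice(["Treatment", "Placebo"]),
            "baseline_score": round(rng.gauss(50.0, 10.0), 1),  # assumed distribution
        })
    return cohort


cohort = synthetic_cohort(5, seed=42)
for subject in cohort:
    print(subject)
```

Fixing the seed means a model tested in the sandbox today can be re-tested against exactly the same cohort later, which supports the validation use described above.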

Unstructured data and collaboration

One of the largest untapped opportunities lies in unstructured information. Patient notes, investigator correspondence and free-text adverse-event reports contain signals that rarely make it into formal analysis. Techniques such as natural language processing and retrieval-augmented generation are now making this material usable, allowing AI to surface relevant evidence while maintaining governance and auditability.
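The retrieval half of retrieval-augmented generation can be sketched in a few lines. The example below ranks free-text documents by simple word overlap with a query and returns each hit with its document identifier, so that any downstream answer can cite where its evidence came from. Word overlap is a deliberate simplification standing in for the embedding-based search a production system would more likely use, and the documents and identifiers are invented.

```python
import re

# Hypothetical unstructured sources with stable identifiers.
documents = [
    {"doc_id": "NOTE-17", "text": "Patient reported mild rash after second dose."},
    {"doc_id": "MAIL-04", "text": "Site asks whether visit windows can be extended."},
    {"doc_id": "AE-112", "text": "Severe rash and itching reported two days after dose."},
]


def tokens(text):
    """Lower-case a text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, docs, top_k=2):
    """Return the top_k documents sharing the most words with the query."""
    query_tokens = tokens(query)
    scored = []
    for doc in docs:
        overlap = len(query_tokens & tokens(doc["text"]))
        if overlap:  # skip documents with no shared words
            scored.append((overlap, doc["doc_id"], doc["text"]))
    # Highest overlap first; ties broken by doc_id for a stable order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [{"doc_id": d, "score": s, "text": t} for s, d, t in scored[:top_k]]


hits = retrieve("rash after dose", documents)
for hit in hits:
    print(hit["doc_id"], hit["score"], hit["text"])
```

Keeping the `doc_id` alongside every retrieved passage is what preserves auditability: a generated summary can list the notes and reports it drew on, and a reviewer can open them.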

Meanwhile, a lack of foundational standardisation makes collaboration difficult, slowing progress at precisely the point when speed matters most. Cloud-based, GxP-compliant platforms are becoming the connective tissue of this ecosystem. They allow teams to work in familiar tools while ensuring consistent access, version control and audit trails across organisations.

Looking ahead

The transformation of clinical trials through AI is not about replacing statisticians or automating judgement. It is about giving experts better tools, accountable AI, higher-quality data and clearer visibility across the development process. AI agents are acting as copilots that surface evidence for a human to sign off, rather than black boxes making independent decisions.

As the industry moves towards quantum-enabled safety screening, personalised enrolment, regulatory sandboxes and fully decentralised trials, one thing becomes clear: these future-gazing innovations all depend on analytical environments that are flexible, auditable and built for collaboration. The organisations that thrive will be those quietly investing in that foundation, including a data fabric that is ready for this type of analysis, enabling the next wave of clinical research to be trusted, scalable and sustainable.

About the author

Dr Mark Lambrecht and his team operate at the intersection of research, information science and analytical cloud technology to solve advanced analytical and AI challenges at hospitals, pharmaceutical manufacturers and various healthcare organisations.