The impact of automation on drug discovery

Posted: 21 July 2007

Automated systems and modern pharmaceuticals have both had a hugely positive impact on human life. While these technologies developed in parallel with one another during roughly the same time period in the early 20th century, they didn’t interact until automation found its way into the laboratory in the 1970s.

Since that time, highly automated robotic systems such as High Throughput Screening (HTS) operations, compound repositories and parallel synthesis chemistry labs have become ubiquitous in core laboratories and are increasingly found in academic and institutional environments. However, it is surprisingly difficult to measure the impact of automation as an isolated entity. Because the settings that employ robotics on a large scale have always incorporated automated systems, they must be assessed either holistically, by looking at the impact of the combined technology, or at a lower level, by using less global productivity measures. This problem is compounded by the fact that the details of core operations in pharmaceutical companies are closely held as proprietary information.

These caveats aside, one cannot dispute that automated systems have had a major impact on the drug discovery process. But a clearer definition of automation must be provided before delving too deeply into an assessment of its benefits. Setting aside data automation systems and focusing on hardware alone, many common devices can be classified as automation. However, let us make the distinction that equipment must perform a function autonomously to be considered automated; this excludes most common laboratory equipment. The remaining devices, though potentially requiring human initiation and intervention, are clearly “automation”. Automated equipment can be further divided into three primary categories: hand-held, unit/work cell and integrated automation [1]. Each of these has valuable uses in the discovery laboratory; however, as I will explain, the distinction between them is not as clear as it might be on a factory floor.

Hand-held automation generally describes devices that not only require human participation to function but must also be held and controlled during operation. Examples include the multitude of automated single and multichannel pipettes, as well as hand-held barcode scanning devices and their counterparts. The former have had a huge impact on laboratory productivity by facilitating dispensing into microplates: they allow one to perform repeat aliquoting and to add reagents to rows and columns of microplates. Hand-held automatic pipettes have enabled the use of microplate technologies in areas such as screen development, where the application of large scale automation would be inappropriate, as well as in academic laboratories and small companies, where such equipment might be too expensive. But as the density of microplates has increased, the utility of hand-held automation has decreased. Skilled laboratory personnel can hand pipette into 96 and 384 well plates, but higher densities such as the 1536 well plate are very difficult to handle manually on a routine basis. Hand-held barcode scanners are another example, enabling mobile sample tracking and inventory control, often using wireless links to laboratory information management systems (LIMS) for real time sample management. They allow two-way communication for inventory control of compound management and reagent systems and can also function as a mobile interface to a company LIMS.
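To make the row and column geometry behind this concrete, the following is a minimal sketch in Python. It assumes the conventional layouts of 8 × 12, 16 × 24 and 32 × 48 wells for 96, 384 and 1536 well plates, with rows labelled by letters and columns by numbers. The names `PLATE_FORMATS`, `row_label` and `column_wells` are hypothetical and chosen only for illustration.

```python
import string

# Assumed conventional layouts: wells per plate -> (rows, columns).
PLATE_FORMATS = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(index):
    """Return the letter label for a zero-based row index: A..Z, then AA, AB, ..."""
    letters = string.ascii_uppercase
    if index < 26:
        return letters[index]
    return letters[index // 26 - 1] + letters[index % 26]


def column_wells(plate_size, column):
    """List the well names in one column (1-based) of the given plate format."""
    rows, cols = PLATE_FORMATS[plate_size]
    if not 1 <= column <= cols:
        raise ValueError(f"column must be between 1 and {cols}")
    return [f"{row_label(r)}{column}" for r in range(rows)]


if __name__ == "__main__":
    for size in PLATE_FORMATS:
        wells = column_wells(size, 1)
        print(f"{size}-well plate: {len(wells)} wells per column, {wells[0]} to {wells[-1]}")
```

Under these assumptions a single column holds 8, 16 or 32 wells depending on the format, which is one way to see why column-wise manual additions become impractical at the highest density.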

Unit automation continues to be the most commonly used type of automation, not just in drug discovery but in all industrial applications. It is also called ‘workstation’ or ‘work cell’ automation; as its name implies, the term generally describes any automated device that performs a single operation or unit of work unattended. This type of equipment also makes up the subsystems of fully integrated automated systems and, admittedly, the distinction between the two is very unclear. In laboratory automation the term is usually applied to all bench-top devices that can conduct processes unattended including, but not limited to, most of the common readers and detection devices found in biological laboratories, as well as liquid handling X-Y-Z robots. But there is an increasing number of workstations, particularly liquid handling devices, that also incorporate basic plate moving capabilities and plate reading devices in addition to their basic pipetting function, allowing them to perform entire processes unattended (Figure 1).

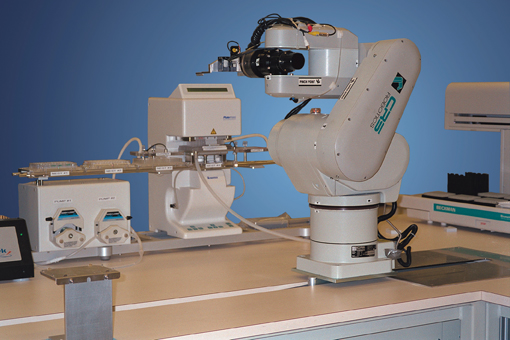

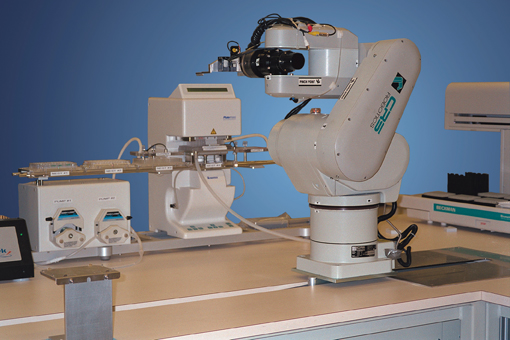

These devices fall somewhere between unit and full automation and are better classified by their application. Is the device being used to perform a complex assay, compound preparation protocol or chemical synthesis step unattended, or is it performing a single function as part of a disconnected assay process in which a human or another robotic unit transfers work from station to station? For example, once loaded with source plates and reagents, the devices shown in Figures 2 and 3 are capable of processing a very large number of samples without human intervention. If the SciClone instrument (Figure 2) were being utilized in a library management setting, it would be considered a fully automated device because it can perform all the necessary plate moving and transfer operations in creating mother and daughter plates.

In this case, however, it is utilized as part of an HTS operation, where it performs the first processing step following transfer of plates from the library management group. After the plates are processed and sorted into units of work, the stacks are moved to the dispensing system shown in Figure 3, where reagents for up to 6 assays are added in parallel. Any incubation and subsequent addition steps occur in these stacks. At the end of the assay protocol, each stack represents a single biological assay and can be read or further processed as a unit of work. All of these operations can occur in parallel, thereby increasing the throughput of the entire operation and making it scalable and flexible.

The picture formed in most people’s minds when discussing automation is either a science fiction/I-Robot style humanoid or a robotic welder assembling an auto chassis. (Surprisingly, the latter is usually a unit operation within an assembly line.) In fully automated systems, unit automation is integrated with a device for transferring work between stations; in drug discovery this will generally entail moving microplates or tubes between devices that perform operations such as liquid handling, incubation, shaking or detection. By connecting each individual operation, complex biological assays can be automated for complete walk-away operation.

The complexity of fully automated systems can range from simple plate feeders to large systems with multiple robotic arms. Equipment manufacturers and integrators have developed systems with a variety of architectures, but the robotic arm remains the most common, with conveyor systems a close second. The introduction of robotic fingers that are capable of gripping and moving microplates (Figures 4 and 5) for existing industrial arms was first made feasible by the integration of modular unit automation.

In the early days of laboratory automation, robotic systems were primarily assembled and sold by professional integrators whose business was the creation and assembly of these custom systems rather than the manufacture of equipment. Each of these bespoke solutions was created to meet the requirement specifications of an individual customer. As a result, maintenance and any post-installation changes required potentially expensive contracting with the integrator or an internal engineering staff member. The introduction in the mid-1990s of control software capable of scheduling tasks and conducting error recovery allowed customers to make limited process changes without changes to hardware or software. As reliability and ease of programming improved, it became possible for a single automated solution to meet most of the needs of a discovery lab engaged in HTS. The market then followed a natural evolution toward standardized systems offered by multiple equipment vendors; today one can purchase a turnkey automated solution for most applications in biological assay automation, compound library management and chemical synthesis and expect reliable operation and very short set-up times.

The simplest analysis of the impact of automated technology would be to compare the relative outputs of each of the types previously described. This analysis has been performed many times [2-4] with no clear conclusion, and the results are frequently counterintuitive. One would expect that a fully automated system would always be much more efficient and operate at a higher rate, but systems employing unit automation can offer equal throughputs and require only incremental increases in personnel. On the other hand, fully automated systems can offer advantages in timing precision and duration of operation that cannot be obtained with human-attended systems. Likewise, when handling large amounts of radioisotopes, infectious materials or biological hazards, the reduction of exposure to laboratory personnel more than justifies the use of walk-away automated systems.

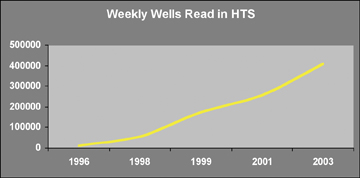

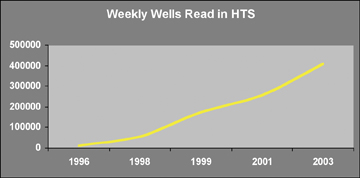

The question therefore remains open: what impact has automation had upon drug discovery? At an HTS meeting in 1992, the highest reported throughput was 9,216 samples per day in three assays [5]. In the following ten years this number increased tenfold (Figure 6), largely driven by increased efficiencies found through automation.

HTS labs today can routinely test 1 million samples per week and usually employ a mixture of fully automated and workstation-based systems. More complex automated solutions have reported throughputs as high as 500,000 samples per day [6,7]. Increased chemical library sizes have enabled these extremely high throughputs and have given rise to an entirely separate set of automation solutions to manage chemical libraries [8,9]. In both of these areas the increased throughput allowed by automation has also been utilised to increase the quality of the data generated: in bio-assays, replication and dose response generation become affordable as throughputs increase, and in chemical library management quality control can be increased.
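To put the throughput figures quoted above into physical terms, the short Python sketch below converts sample counts into the number of microplates that must be handled. It assumes one sample per well and ignores control wells, replicates and empty wells, none of which are specified in the text; the function name `plates_needed` is hypothetical.

```python
# Assumed plate formats (wells per plate); one sample per well, no control wells.
PLATE_DENSITIES = (96, 384, 1536)


def plates_needed(samples, wells_per_plate):
    """Return the smallest whole number of plates that holds all samples."""
    if samples < 0 or wells_per_plate <= 0:
        raise ValueError("samples must be >= 0 and wells_per_plate must be > 0")
    return -(-samples // wells_per_plate)  # integer ceiling division


if __name__ == "__main__":
    scenarios = {
        "9,216 samples per day (1992)": 9_216,
        "1 million samples per week": 1_000_000,
        "500,000 samples per day": 500_000,
    }
    for label, samples in scenarios.items():
        counts = ", ".join(
            f"{plates_needed(samples, d)} x {d}-well" for d in PLATE_DENSITIES
        )
        print(f"{label}: {counts}")
```

Under these assumptions, 9,216 samples correspond to exactly 96 plates in the 96 well format, while 1 million samples require more than 10,000 such plates but only about 650 plates at 1536 wells, which illustrates why higher densities and automated plate handling tend to go together.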

A final, often overlooked benefit of automation in the biological sciences is that the application of automated processes has driven a parallel increase in the recognition of the value of fixed processes themselves; the utilisation of automated techniques in the development of biological assays is a perfect example [10,11]. The benefits of this are relevant to many areas: standardizing assay development processes allows a smoother transition to fully automated operation and allows the utilisation of smaller scale automation earlier in the process. This leads to an increase in the quality and quantity of data surrounding an assay for decision making, and reduces failure rates in full high throughput operation. But a more direct impact of process standardization is that it specifies a much more detailed list of experimental parameters and establishes a statistical quality requirement for each, thereby ensuring that anomalous or ambiguous results will be investigated, resulting in better data. Last but not least, the combination of process and automation in assay development allows many assays to be developed in parallel, thereby greatly increasing efficiency.
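The text does not name a particular statistic, but one widely used example of a statistical quality requirement in screening is the Z′-factor, which compares the separation between positive and negative control wells with their variability. The Python sketch below is an illustration only, under the assumption that control readings are available as plain lists of numbers; the function name `z_prime` and the sample values are hypothetical.

```python
from statistics import mean, stdev


def z_prime(positive_controls, negative_controls):
    """Z'-factor: 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|.

    Each argument is a sequence of at least two control-well readings.
    """
    separation = abs(mean(positive_controls) - mean(negative_controls))
    if separation == 0:
        raise ValueError("control means are identical; Z' is undefined")
    spread = stdev(positive_controls) + stdev(negative_controls)
    return 1 - 3 * spread / separation


if __name__ == "__main__":
    # Hypothetical control readings from a single plate.
    high = [100, 102, 98, 100]
    low = [10, 12, 8, 10]
    print(f"Z' = {z_prime(high, low):.2f}")
```

A value approaching 1 indicates well separated controls; many laboratories treat a value of roughly 0.5 or above as acceptable, although the threshold applied in any given process is a local decision and is assumed here rather than taken from the text.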

Even when expensive automated systems are not utilised, process optimisation can have a very positive impact; by incorporating very basic hand-held or unit automation with microplate-based column-wise and row-wise reagent additions, significant reductions in development times and increased data quality can be obtained. As more researchers in both industrial and academic environments become connected with facilities employing automated techniques, they have become more aware of the power of these techniques. In academic institutions this can be largely credited to the widespread application of genomic research and core sequencing laboratories.

Automation can also have negative aspects; one must be very careful that basic parameters are not changed when automating a process, particularly a biological assay. Seemingly simple changes, such as the order of reagent additions or proportional volume scaling, can have a major impact on the relevance of results. Another consideration is that once a process is automated, it becomes fixed. In manufacturing it is not uncommon to establish an automated process and have it remain constant for years. In HTS this is rarely the case: the average duration of an HTS screen can be measured in weeks or months. This means that when fully automated systems are employed they must either incorporate sufficient peripheral devices to run most assay types, or multiple systems, each specialized for an assay format, must be deployed. Fully flexible systems are costly and must provide sufficient throughput to justify the investment. In the latter case, there must be enough similar targets in the testing pipeline to replace existing targets on automated systems; this can lead to prioritization decisions driven by convenience rather than true corporate strategy. Offsetting this is the increasing requirement for automation support in the areas downstream of HTS, such as Lead Optimization, where assays that are developed to support the testing of medicinal chemistry compounds need to produce comparable results over several years. As the industry continues to move toward testing these molecules in parallel profiling assays in order to establish selectivity during the chemistry optimization process, the increased data demands are leading to the deployment of HTS assays and automated solutions in medium throughput environments.

Conclusion

In conclusion, while it has been said that the throughput problem in drug discovery has been solved, it appears that the industry continues to show an increased hunger for data. The increased volumes and quality of data in pharmaceutical databases provide a rich resource for computational approaches and empirical shortcuts, provided that high quality standards can be maintained. But perhaps the biggest change today is the exponential increase in the hardware sophistication of academic researchers; as automated systems are more heavily utilised in all aspects of the discovery process, innovation is also accelerating. Initiatives such as the NIH Roadmap Initiative [12] are not only increasing the recognition and usage of automated processes but also making the large quantities of data generated available in the public domain. Automation has moved from a novelty to a commonly used tool, and as researchers in all areas continue to integrate automated solutions into their everyday environment, we are seeing a shift in the scientific method. Rather than gathering data iteratively and moving stepwise through hypothesis – experiment – conclusion, scientists today gather large datasets that explore multiple hypotheses in a single experiment, then utilize computational tools to explore many conclusions and raise new questions in a collaborative, global environment.

Figure 1: Biomek FX™ (Beckman Instruments) configured with one 384-well pipetting head and one Span-8 pipettor, integrated with a PlateCrane robotic arm (Hudson Control). This instrument is capable of performing complex plate to plate transfer as well as flexible reagent addition protocols in a walk-away operation mode.

Figure 2: SciClone™ (Caliper LifeSciences) liquid handler interfaced to three stacker and rail systems. This system is optimized for performing plate to plate transfers for High Throughput Screening. One rail with 2 stackers provides source plates in a 384 well format, while two additional rails with 10 stackers each provide destination plates. The system is configured with one 384 well pipetting head and an 8 tip flexible dispenser.

Figure 3: LiquidZilla (Amphora Discovery) automated dispensing system. This system has a single rail with 12 stackers and can add 6 reagents independently to each of six 384 well microplates.

Figure 4: Zymark Twister™ robotic arm with plate gripping fingers (Caliper LifeSciences). This was one of the earliest finger designs and is still widely used.

Figure 5: CRS robotic arm (Thermo Scientific) integrated in an assay system with peripheral devices capable of performing fully automated biological assays.

Figure 6: Average throughput in High Throughput Screening laboratories, in wells tested per week. Compiled from data contained in SBS podium presentations and the High Tech Business Decisions annual survey of HTS managers [13].

References

1. Hopp and Spearman (1996) Factory Physics, Richard D Irwin, Chicago.

2. K. Menke (2002) Unit Automation, in High Throughput Screening Methods and Protocols (W. Janzen, editor), Humana, Totowa, NJ.

3. M.A. Sills (1997) Integrated robotics vs. task-oriented automation, Journal of Biomolecular Screening, 2, 137-138.

4. M. Banks (1997) High throughput screening using fully integrated robotic screening, Journal of Biomolecular Screening, 2, 133-135.

5. F. Leichtfreid (1992) Novel Approaches to High Throughput Screening Automation, Data Management Technologies in Biological Screening, SRI International.

6. J. Inglese et al. (2006) Quantitative high-throughput screening: a titration-based approach that efficiently identifies biological activities in large chemical libraries, PNAS, 103(31), 11473-11478.

7. http://www.kalypsys-systems.com/

8. W. Keighley and T. Wood (2002) Compound Library Management – An Overview of an Automated System, in High Throughput Screening Methods and Protocols (W. Janzen, editor), Humana, Totowa, NJ.

9. U. Schopfer et al., Screening Library Evolution through Automation of Solution Preparation, Journal of Biomolecular Screening (in press, published online May 2007).

10. M.W. Lutz et al. (1996) Experimental design for high-throughput screening, Drug Discov. Today, 1, 277-286.

11. P. Taylor, F. Stewart et al. (2000) Automated Assay Optimization with Integrated Statistics and Smart Robotics, Journal of Biomolecular Screening, 5, 213-225.

12. NIH website or white paper.

13. Sandra Fox, High Tech Business Decisions, private communication.

Bill Janzen

Mr. Janzen received his educational training in Physics from the University of North Carolina. He managed a comparative endocrinology lab at the University of North Carolina until 1989 when he joined Sphinx Pharmaceuticals, then a start-up biotechnology company. Following Eli Lilly and Company’s acquisition of Sphinx in 1994, Mr. Janzen became Director of Lead Generation Technologies and Operations for Eli Lilly and Company’s Sphinx Laboratories site in the Research Triangle Park, NC. Throughout his career, Mr. Janzen has championed the use of industrial processes and quality control in drug discovery. Internal processes at Sphinx and Lilly were first and best in class and demonstrated the success of industrialization in a research environment. He then highlighted them via key presentations and publications to facilitate acceptance across the industry. Mr. Janzen assembled the leading publication on High Throughput Screening, “High Throughput Screening, Methods and Protocols”, Humana Press.